Data strategy

Plan learning objectives, the timeframe of expected change, and data collection methodology.

Program Planning with Evaluation in Mind

@Program Planning Doc (demo)

Bloom’s Taxonomy and the Kirkpatrick Model

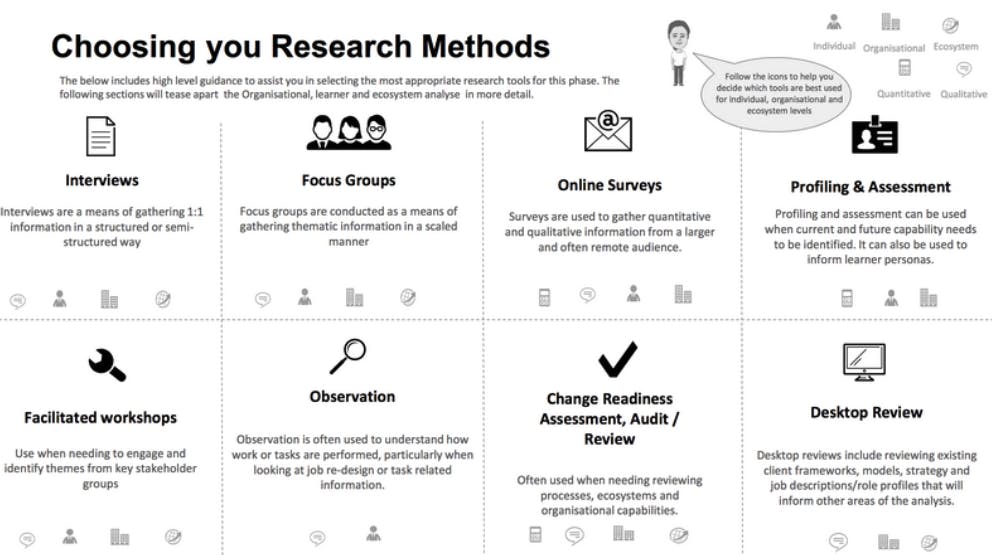

Data Collection Methods

Poorly Performing Programs

We've been fooled into thinking that classroom training is actually learning, in the same way that we've chosen completion as a high metric of learning. It's simply presence and exposure. What we should be doing instead is fully understanding what people are trying to do and giving them support when they need it, tailored to their context and role. Let's measure efficacy, not engagement.

"Participants in corporate education programs often tell us that the context in which they work makes it difficult for them to put what they've been taught into practice."

Most follow-up studies of leadership learning and development programs found that most supervisors had regressed to their pre-training views. If the system doesn't change, it will set people up to fail.

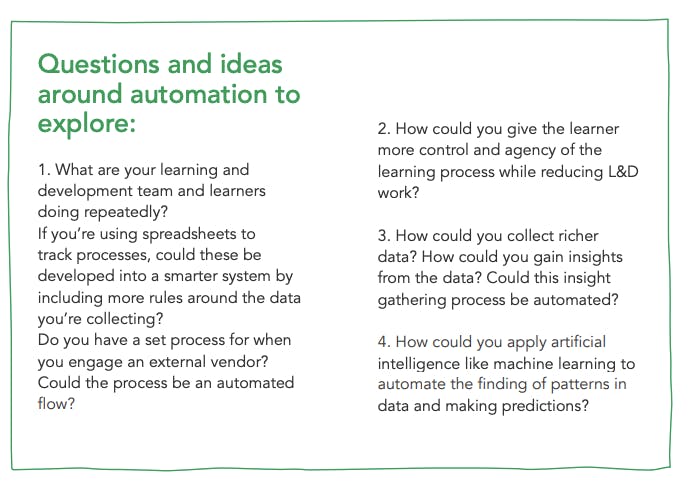

Data Experimentation

How does this apply to you?

Who

What

When

Why

What now?

Canva University

Use the Program Planning doc to articulate your thought process behind planning programs and their evaluation methods

Before designing a program or when redesigning a program

To be clear about the intentions of the program: planning out the objectives, evaluation methods, and expected impact up front makes measurement and reporting easier.

Use the Bloom’s Taxonomy and Kirkpatrick Model sheet to break down the metrics of your program and how you’ll evaluate success

Before designing a program or when redesigning a program

Use the Season Reporting Template to report back on the health of your program and goals. The global dashboard with our Level 1 metrics should make this process easier.

The last week of each season

To report back to our stakeholders around our programs

Escalate to @Alan Chowansky or @Polly Rose if any program shows signs of poor performance, e.g. 3 months of low engagement or a lack of visibility over any changes.

Whenever you notice the first signs

To prevent programs from breaking

Learning Admin

Update the Global Learning Dashboard

Whenever new data comes in around attendance and satisfaction
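The escalation rule in the table above ("3 months of low engagement") could be checked automatically against dashboard data. The following is a minimal sketch only: the function name `needs_escalation`, the 0 to 1 engagement scale, and the 0.5 threshold are all hypothetical, since the doc does not define how engagement is scored or what counts as "low".

```python
from typing import Sequence

# Assumptions (not from the doc): engagement is a monthly score between 0 and 1,
# and anything below 0.5 counts as "low". Only the 3-month window comes from the doc.
LOW_ENGAGEMENT_THRESHOLD = 0.5
CONSECUTIVE_MONTHS = 3


def needs_escalation(
    monthly_engagement: Sequence[float],
    threshold: float = LOW_ENGAGEMENT_THRESHOLD,
    months: int = CONSECUTIVE_MONTHS,
) -> bool:
    """Return True if the most recent `months` scores are all below `threshold`."""
    if len(monthly_engagement) < months:
        # Not enough history yet to apply the rule.
        return False
    recent = monthly_engagement[-months:]
    return all(score < threshold for score in recent)


if __name__ == "__main__":
    # Hypothetical programs and scores, oldest month first.
    programs = {
        "Program A": [0.8, 0.4, 0.3, 0.2],
        "Program B": [0.4, 0.3, 0.6],
    }
    for name, scores in programs.items():
        if needs_escalation(scores):
            print(f"{name}: escalate")
```

A check like this only covers the engagement signal; softer signs such as a lack of visibility over changes still need a human judgment call.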

Data Strategy Templates

Name

Description

1

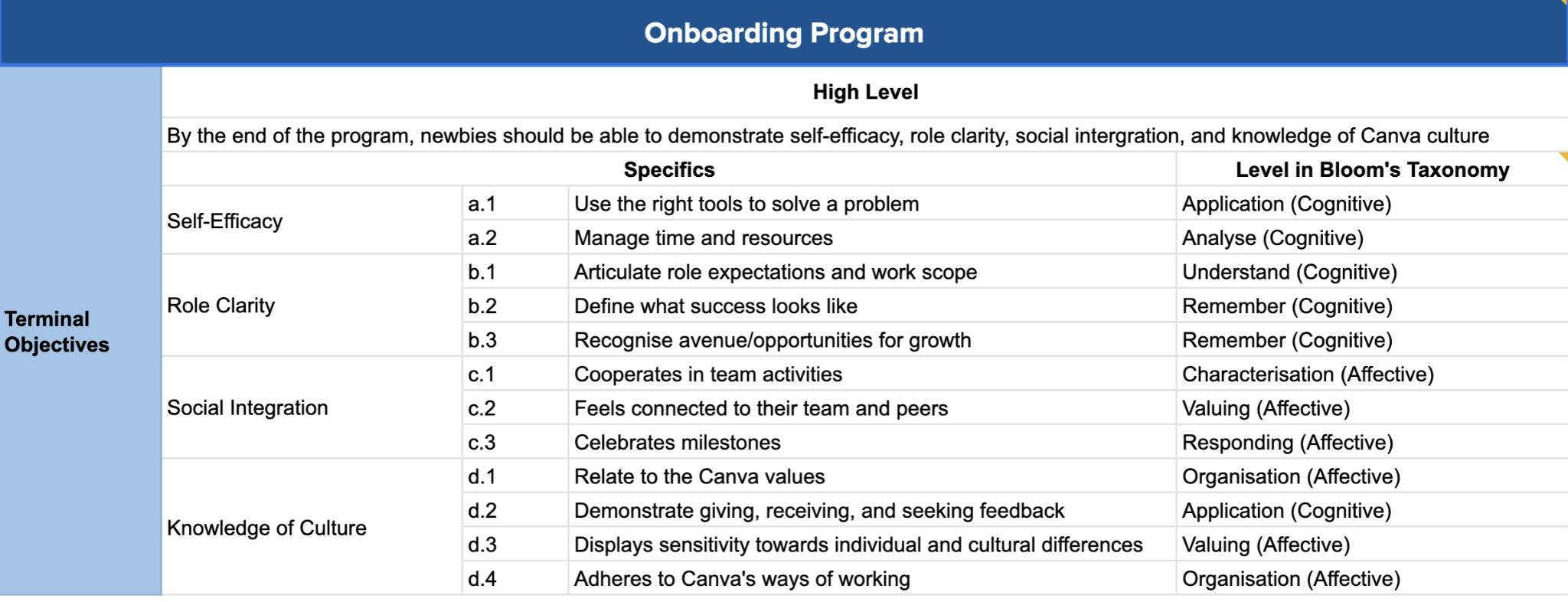

The purpose of the Program Planning doc is to plan and articulate the learning purpose and strategy for a Canva U program. Completing the doc will allow you to plan your work accordingly and ensure all Canva U programs are delivering the right outcomes in the most impactful way.

2

The Bloom’s Taxonomy and Kirkpatrick Model sheet will help you determine the objectives of your program / learning event and articulate measurable outcomes at each of the 4 Kirkpatrick levels.

3

The dashboards currently record attendance of all our sessions and programs.
